I recently received a request to modify one of our intranet applications so that users would have an easy way of inserting links into a textarea. Because of how we use the data in the textarea, I didn't want to implement a full WYSIWYG editor, so I had to come up with another solution.

The users that will be adding links are power users, but they understandably didn't want to insert the links manually. They also wanted to select from a list of links that are defined in a different part of the application. So, I added a little bit of code that shows a select box with all the possible links when text is selected in the textarea. When the user selects a link option, the textarea is updated to contain the HTML code necessary to display a link that opens in a new window.

Below is the code that I use to get the selection and insert the link. It is somewhat crude, yet effective.

<script type="text/javascript"
  src="jquery-1.3.2.min.js"></script>
<script type="text/javascript">
$(document).ready(function(){
  /**
   * Gets the start and end position for the selection
   * @param {string} pSelectArea The textarea
   * to get the selection from (jQuery selector)
   * @return An object with the parameters start and end
   * @type object
   */
  function getSelectionRange(pSelectArea){
    // declared locally so they do not leak into the global scope
    var tSelectStart, tSelectEnd;
    // if we are in IE
    if(document.selection){
      var tBookmark =
        document.selection.createRange().getBookmark();
      var tSelection =
        $(pSelectArea).get(0).createTextRange();
      tSelection.moveToBookmark(tBookmark);
      var tBefore =
        $(pSelectArea).get(0).createTextRange();
      tBefore.collapse(true);
      tBefore.setEndPoint("EndToStart", tSelection);
      var tBeforeLength = tBefore.text.length;
      var tSelectionLength = tSelection.text.length;
      tSelectStart = tBeforeLength;
      tSelectEnd = tBeforeLength + tSelectionLength;
    }else{
      tSelectStart = $(pSelectArea).get(0).selectionStart;
      tSelectEnd = $(pSelectArea).get(0).selectionEnd;
    }
    return {start: tSelectStart, end: tSelectEnd};
  }

  /**
   * Inserts a link into text between a start and end point
   * @param {string} pText The text to insert the link into
   * @param {string} pLink The link to insert
   * @param {integer} pStart The starting location
   * @param {integer} pEnd The ending location
   * @return The new text with the link
   * @type string
   */
  function insertLink(pText, pLink, pStart, pEnd){
    // text before the selection, opening tag, selected text,
    // closing tag, text after the selection
    var tText01 = pText.substr(0, pStart);
    var tText02 = "<a target='_blank' href='" + pLink + "'>";
    var tText03 = pText.substr(pStart, pEnd-pStart);
    var tText04 = "</a>";
    var tText05 = pText.substr(pEnd);
    return tText01 + tText02 + tText03 + tText04 + tText05;
  }

  var selection = {start:0,end:0};

  // when a selection is made grab the
  // range and show the link select
  $("#MY_TEXTAREA").select(function(){
    selection = getSelectionRange("#MY_TEXTAREA");
    $("#MY_SELECT").fadeIn(1000);
  });

  // when the select changes add the link
  $("#MY_SELECT").change(function(){
    if(selection.end>selection.start){
      var tNewText = insertLink(
        $("#MY_TEXTAREA").val(),
        $(this).get(0).value,
        selection.start, selection.end);
      $("#MY_TEXTAREA").val(tNewText);
    }
  });
});
</script>
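
As a quick sanity check of the insertion logic, here is a standalone sketch that runs outside the browser. The function name `wrapSelectionInLink`, the sample text, and the `intranet.example` URL are made up for illustration, and the attribute escaping is an extra step that the code above does not perform.

```javascript
// Standalone variant of the link insertion, using slice() on plain strings.
function wrapSelectionInLink(text, href, start, end) {
  // Escape characters that could break out of the single-quoted attribute
  // (an addition for this sketch, not part of the original snippet).
  var safeHref = String(href)
    .replace(/&/g, "&amp;")
    .replace(/'/g, "&#39;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
  return text.slice(0, start) +
    "<a target='_blank' href='" + safeHref + "'>" +
    text.slice(start, end) +
    "</a>" +
    text.slice(end);
}

// "handbook" occupies positions 8 through 15, so the range is start 8, end 16.
var result = wrapSelectionInLink(
  "See the handbook for details.",
  "http://intranet.example/handbook",
  8, 16);
// result:
// See the <a target='_blank' href='http://intranet.example/handbook'>handbook</a> for details.
```

The start and end values play the same role as the `start` and `end` properties of the selection object: the end position is one past the last selected character.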


Ronald Reagan once said, "The best minds are not in government. If any were, business would hire them away." But, I'm not so sure that holds completely true. When it comes to adopting more efficient information technologies, it seems that government is leading the way, at least when compared to private organizations of similar size.

Where I work I have advocated the use of more efficient technologies, or technologies that present the best value to the company. In order to conserve money in these difficult times I have suggested switching to either open source technologies or cloud computing solutions. But, so far my ideas have been brushed aside as being too radical or too risky. In fairness, I didn't invent the ideas that I have presented. I just advocate the use of technologies which I have seen successfully implemented elsewhere.

I just recently read about how the French national police have saved millions by switching to Ubuntu from Windows. The organization had previously stopped using Microsoft Office products in favor of the more economical OpenOffice, so they were familiar with open source software. Originally planning to upgrade to Vista, they decided on switching to Ubuntu because of the dramatic cost savings. They plan on moving all of their 90,000 computers to Ubuntu Linux by 2015. Their goal for this year alone is to migrate 15,000 computers from Windows to Linux, and they have completed 5,000 so far. In general it would seem that the French government is serious about reducing IT costs by adopting open source technologies; multiple federal agencies there use those types of technologies.

There are signs of change here in the United States as well. As CTO of Washington, D.C., Vivek Kundra successfully implemented the use of Google Apps, an application-domain cloud computing service. Those actions not only saved the district hundreds of thousands of dollars, but also increased the efficiency and transparency of the organization. And now that visionary leader is the nation's CIO, so I look forward to broader applications of open source software.

Open source software has been used by federal agencies in the United States for a while now, but it has mostly been limited to high-tech sections of government. The NSA has developed their own enhancements to Linux in order to create an ultra-secure computing environment. The FBI uses LAMP (Linux, Apache, MySQL, PHP/Perl/Python) to power their emergency response network. The Library of Congress uses Linux clusters to digitize its historic document collections. And NASA was probably the first to adopt the use of open source software; they used Debian Linux to control space shuttle experiments on mission STS-83 in April of 1997. With Mr. Kundra in charge of national IT we may see the use of open source and cloud computing expand greatly.

I do see some open source software being used in business, but it is usually by engineering groups and not officially sanctioned by IT. Our IT organization does not possess the necessary skill sets to support technologies like Linux. I would suggest that if business leaders want to reduce their information technology expenditures without reducing service levels, they should seek out IT leaders who are familiar with alternative technologies and not afraid to break the status quo.

Government has a bad reputation for being inefficient, but at least from a technology perspective that does not really seem to be the case. It looks as if governments around the world are adopting efficient, best-value technologies at a faster pace than private sector organizations. If the best minds are not in government, then they must be hiring the best consultants.


Occasionally I will receive some unusual SMS messages on my cell phone from numbers that I don't recognize. Some of the text messages seem to be questions, while others are locations or cryptic statements. At first I thought that they could be some sort of SMS spam. Later I started to think that my phone number might just be similar to that of some very popular person, and their friends were mistyping the number. In any case I would just delete the message and not think much of it.

Today I received the message "define: paraphyletic". Then it finally struck me that I must have a number that is similar to one provided by some sort of SMS answer service. Sure enough, if you type Google on a phone, the numbers are 466453. If another number is accidentally inserted in the right place, it becomes my phone number.
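
For anyone who wants to check the digits, the mapping can be sketched in a few lines. This assumes the standard letter layout on a phone keypad; the names `KEYPAD` and `wordToDigits` are just made up for the example.

```javascript
// Letters printed on each key of a standard phone keypad.
var KEYPAD = {
  2: "abc", 3: "def", 4: "ghi", 5: "jkl",
  6: "mno", 7: "pqrs", 8: "tuv", 9: "wxyz"
};

// Converts a word to the digits you would press to "type" it.
function wordToDigits(word) {
  return word.toLowerCase().split("").map(function (ch) {
    for (var digit in KEYPAD) {
      if (KEYPAD[digit].indexOf(ch) !== -1) {
        return digit;
      }
    }
    return ch; // characters without a key mapping are left unchanged
  }).join("");
}

var googleDigits = wordToDigits("Google"); // "466453"
```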

Now that I know this I can have some fun with people who mistype Google into their phone.


I just downloaded iBlogger for my iPhone today, which is where I am writing this post from, and I have some mixed feelings about it. So far it has functioned as advertised, yet it has some quirks.

It is definitely better than posting via email. I always seemed to have problems with line breaks when posting from email, so this is a step in the right direction. The editor works well. I don't mind the lack of horizontal typing, since that has never really been my preference. And, due to the limitations of the mobile interface, you can't format your text (font size, bold, etc.), but that isn't the application's fault. This is also probably why XHTML markup is shown when editing existing posts. One other limitation for Blogger users is the inability to post photos.

So, it does work well for new posts. Working with old posts is problematic. When it initially loaded my existing blog posts, it took the load time and date rather than the time and date the article was actually posted. So, posts from a week ago were dated today. I thought that this was no big deal, but when I edited a post it saved that date and time instead of the original (or even instead of the current). Therefore, when I corrected a typo in a post from last week it changed the time to this morning, effectively making it the most recent post.

One other curiosity is on the blog posts screen. The scrolling is not fluid; it's slow to scroll and feels like it must be doing a lot of work in the background.

I do like the ability to add tags; the interface is clean and efficient. There is also a neat feature that allows you to create a link to Google Maps for your current location. And there is an option to automatically add a signature, though the default signature contains a link to the iBlogger website, which is a mild irritant for a paid application. The signature is enclosed in a div, so I think that you could customize the style of it.

You can also add links. It is a little awkward to use, but it gets the job done. It seems to add the links at the bottom, so when you are editing an article you would want to exit edit mode at the spot where you wanted the link. Add the link and go back into edit mode and clear away the unneeded spacing.

In conclusion, I think that I will continue to use iBlogger to add new posts when I am on the go, but I am hesitant to use it for updating existing posts due to how it changes the timestamps.


These instructions may be specific to my company's SAP environment; I am not really sure, since I have minimal exposure to SAP outside of my company's environment. And, I am not a true SAP expert, so your results may vary.

When our shipping department creates a delivery in SAP we want to automatically generate printouts, such as for the shipping labels. We are able to accomplish this through the use of message conditions. When the delivery is created, SAP checks the shipping point and the sold-to party to determine if it should send messages to other parts of the system for further actions to be completed. Here I am only concerned with the print messages.

Procedure to view existing message conditions

Prior to making any changes I usually like to examine the current state, and this is the procedure to accomplish this. In transaction VV23, enter the output type (ZLnn), and then select key combination. In the key combination dialog select "shipping pt/sold-to P.". Next, there is another entry screen where I can select the "shipping point/receiving pt" or the "ship-to party". Once the desired criteria are entered and execute (F8) is clicked, all of the message conditions for the desired output type are shown according to the selected shipping information.

Procedure for creating a new message condition

The first steps for creating a new message condition are the same as for viewing; the primary difference is the initial transaction code. In transaction VV22, enter the output type (ZLnn), and then select key combination. In the key combination dialog select "shipping pt/sold-to P.". Next, there is another entry screen where I can select the "shipping point/receiving pt" or the "ship-to party". Once the desired criteria are entered and execute (F8) is clicked, all of the message conditions for the desired output type are shown according to the selected shipping information. Here it is possible to add another message condition. Add a new line and enter the "Ship.point", the "Sold-to pt", the medium (1 for printout), the dispatch time, and the language. Once that is completed, the communication button is used to determine where the output will go. Select the output device or printer name, and the number of messages to send (the number of copies). Normally, we also select the "Print immediately" check box. Finally, click the save button to store the new message condition.

Conclusions

Notice that the transactions are VV2n, and the V2 conditions are for shipping. So, if I want to modify handling unit message conditions, which are type V6, I use the transaction VV62 (or VV63 to view the conditions). It's nice how these match up like that. Along those same lines there is: VV13 for sales outputs, VV33 for billing outputs, VV53 for sales activities, and VV73 for transportation.
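
The naming pattern is regular enough to write down as a small lookup. This is only a sketch of the pattern as I described it above: the function name and the property names are invented, and the maintain codes for applications other than shipping and handling units (VV12, VV32, and so on) are an assumption based on the pattern rather than something I have verified.

```javascript
// Application digits as listed above; the property names are informal labels.
var OUTPUT_APPLICATION_DIGITS = {
  sales: 1,
  shipping: 2,
  billing: 3,
  salesActivities: 5,
  handlingUnits: 6,
  transportation: 7
};

// Final digit as used above: 2 to maintain conditions, 3 to view them.
var MODE_DIGITS = { maintain: 2, view: 3 };

// Builds a transaction code such as "VV23" from an application and a mode.
function outputConditionTransaction(application, mode) {
  var appDigit = OUTPUT_APPLICATION_DIGITS[application];
  var modeDigit = MODE_DIGITS[mode];
  if (appDigit === undefined || modeDigit === undefined) {
    throw new Error("Unknown application or mode: " + application + ", " + mode);
  }
  return "VV" + appDigit + modeDigit;
}

var viewShipping = outputConditionTransaction("shipping", "view");               // "VV23"
var maintainHandling = outputConditionTransaction("handlingUnits", "maintain");  // "VV62"
```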


I just finished reading a white paper from researchers at UC Berkeley's RAD Lab entitled "Above the Clouds: A Berkeley View of Cloud Computing", and my interest in cloud computing has been reinvigorated.

For a while I have believed that web-based applications would eventually surpass locally installed software. Around ten years ago, when Macromedia Flash 3 was released with JavaScript support, I thought that I would be able to build some great online applications, but the technology really wasn't quite ready. Now, with technologies such as AJAX and client-side browser storage, the concept may be nearing critical mass. Seeing what Vivek Kundra did for Washington D.C. as their CTO certainly made me feel that large organizations could be ready for cloud computing. But, I know that before private corporations can implement the technology they must first understand it, which is why I feel this white paper is valuable.

The Berkeley paper establishes definitions that bound the scope of the associated technologies, which is very important. It frequently seems to be the case that whenever new technological ideas are being discussed and formed, vendors simply print out new stickers to slap on existing products in order to market them as leading edge technologies. And, if you consider that the use of cloud computing could reduce the number or size of data centers at major corporations, then you can start to understand why computer manufacturers could be threatened by this disruptive concept. It seems reasonable to me to expect that cloud computing providers would find efficiencies that could effectively cut into the lucrative corporate maintenance agreements that server manufacturers love. So, the paper offers knowledge for IT managers to defend themselves against vendor claims.

After the in-depth definition of what cloud computing is and an explanation of why its time has come, the paper goes on to present some obstacles and opportunities. The third obstacle, data confidentiality and auditability, really struck me. This has been the most common objection I have heard to the cloud computing concept. I have long felt that certain SaaS applications, such as Gmail, have provided better protection than similar in-house corporate solutions. But, when I present that idea the follow-up response is usually that the company would lose control of their data. I usually quit debating at that point, as it seems to be a sort of straw man argument. And, arguing against their point would be viewed as criticizing their infrastructure or staff, and that would immediately make them oppose the idea even more.

I feel that in the long run some companies, probably smaller ones, will switch to cloud computing to reduce their overhead, thereby gaining a competitive advantage. Then, the larger companies will be forced to think hard about the value of controlling their own data. Maybe a recession will help to accelerate this process.

That is the part of cloud computing that I enjoy the most: the idea that cloud computing can effectively level the playing field for smaller, more agile software development companies. And, I think that this will further encourage innovation. I know that I will certainly continue to write applications for Google's App Engine, and maybe someday I will develop a real crowd pleaser.


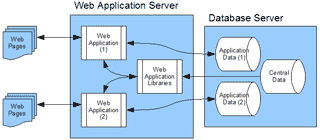

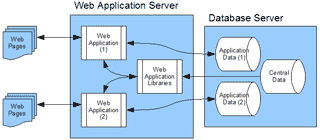

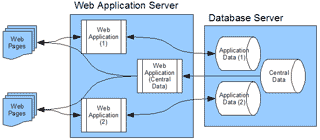

Where I work we have a web application server that runs numerous business applications which are all connected back to a database server. Each application has its own database and also shares read access to a database containing central data such as personnel and department information. The system, which I inherited, works pretty well, but its architecture seems to be somewhat dated. As a part of my continuous improvements, I intend to start reshaping it.

With the current architecture, each web application manages all data access that it needs. Even access to the central data is managed by the specific application. There are some libraries that we have in place to ease the management of this, but the situation can still get difficult when we have to change the format of data in our central repository. Up to this point we have not had any data in the central database that could not be shared amongst all applications, but at some point in the future this may be necessary and would be hard to manage under the current situation.

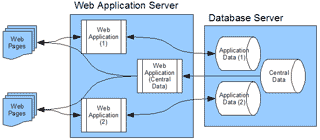

It would be nice to move to a more service-oriented architecture (SOA) approach in general, but the current economic conditions have put a damper on innovative solutions that could be costly or are even perceived as being potentially costly. So, I have been contemplating taking just the intranet systems that I am responsible for and implementing a web-services-based SOA approach.

It seems that this approach would be fairly easy to implement with each incremental improvement we make to the systems. First, we would write a web service provider, or a web application that serves no other purpose than accessing the central data. This application would then be responsible for controlling access to the central data. Then, as we make improvements to the existing applications, we remove the portions of code that allow for direct access to our central data and replace them with calls to the new web service. The applications will still store the data (data keys) back to their respective databases; just the selection lists and details will come from the central data web service.
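
To make the idea a little more concrete, here is a rough sketch of what the calling side might look like once an application stops querying the central database directly. Everything in it is hypothetical: the endpoint paths, the `intranet.example` base URL, and the function names are placeholders, and the transport is passed in so the sketch does not assume any particular HTTP library.

```javascript
// Hypothetical client for the central data web service. The endpoint
// paths ("/departments", "/personnel/<key>") are assumptions.
function createCentralDataClient(baseUrl, fetchJson) {
  return {
    // Selection lists come from the service instead of a direct query.
    listDepartments: function () {
      return fetchJson(baseUrl + "/departments");
    },
    // Details are looked up by the key the application stored locally.
    getPerson: function (personKey) {
      return fetchJson(baseUrl + "/personnel/" + encodeURIComponent(personKey));
    }
  };
}

// Stub transport standing in for a real HTTP call, so the sketch runs anywhere.
var requestedUrls = [];
function stubFetchJson(url) {
  requestedUrls.push(url);
  return { url: url };
}

var centralData = createCentralDataClient(
  "http://intranet.example/central", stubFetchJson);
centralData.listDepartments();
centralData.getPerson("E 1001");
// requestedUrls now holds the two service URLs the application asked for.
```

Because every application would go through one small client like this, a change to the format of the central data would only have to be absorbed in the service and in this wrapper.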


Yesterday I wrote a post describing why I decided to switch from MooTools to jQuery, and today I have my first small comparison between the two. For one project that I am working on I have a simple autocomplete text input, and below I will show how I completed this little task in both MooTools and jQuery.

I am using plugins for both of the frameworks in order to accomplish this task, so this doesn't truly compare the functionality of the frameworks themselves. But, this is a common task that I have and it starts to give me a feel for how the two match up.

The data returned from the server-side script is different for each of these two methods. The MooTools method gets an array returned, whereas the jQuery method gets the results returned as a newline-delimited file (one autocomplete suggestion per line).
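
To illustrate the difference between the two response bodies, here is a small sketch. The suggestion values are made up, and my actual server-side script is not shown; this only demonstrates the two payload shapes described above.

```javascript
// Made-up suggestions, standing in for whatever the server-side script finds.
var suggestions = ["network-a", "network-b"];

// Array form, as consumed by the MooTools JSON request.
var jsonBody = JSON.stringify(suggestions); // '["network-a","network-b"]'

// Newline-delimited form: one suggestion per line.
var lineBody = suggestions.join("\n");

// Reads the newline-delimited form back into an array, skipping empty lines.
function parseLineBody(body) {
  return body.split("\n").filter(function (line) {
    return line.length > 0;
  });
}
```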

MooTools

<link href="mootools/autocomplete/Autocompleter.css"
  rel="stylesheet" type="text/css" />
<script type="text/javascript"
  src="mootools/mootools-1.2.1-core-yc.js">
</script>
<script type="text/javascript"
  src="mootools/autocomplete/Observer.js">
</script>
<script type="text/javascript"
  src="mootools/autocomplete/Autocompleter.js">
</script>
<script type="text/javascript"
  src="mootools/autocomplete/Autocompleter.Request.js">
</script>
<script type="text/javascript">
document.addEvent('domready', function() {
  var networkcompleter =
    new Autocompleter.Request.JSON('ac-network', '../list/network', {
      'minLength': 2,
      'indicatorClass': 'autocompleter-loading',
      'postVar': 'q'
    });
});
</script>

jQuery

<link rel="stylesheet" type="text/css"
  href="jquery/jquery.autocomplete.css" />
<script type="text/javascript"
  src="jquery/jquery.js"></script>
<script type='text/javascript'
  src='jquery/jquery.bgiframe.js'></script>
<script type='text/javascript'
  src='jquery/jquery.autocomplete.js'></script>
<script type="text/javascript">
$().ready(function() {
  $("#ac-network").autocomplete('../list/network', {
    minChars: 2
  });
});
</script>

<input type="text" class="textlong" id="ac-network" name="ac-network" />

Update: 2009-03-31

While reading through the jQuery Blog, I found a nice plugin that is a “replacement for html textboxes and dropdowns, using ajax to retrieve and bind JSON data”. In other words, it's an autocompleter or suggest box. It is from Fairway Technologies and is called FlexBox. It seems to be very powerful; check out some of the FlexBox demos.

You can also select Insert > New Text Area from the menu.